People who build everything from entertainment experiences to financial management face a dilemma: how can you scale what you’re building for broader consumption, yet maintain the personalization that makes it special? A fundamental tension exists between building something individualized and scaling it to consumers such as visitors at a theme park or gamers exploring the latest Zelda adventure. True disruption happens when we overcome the idea that one must sacrifice personalization to achieve mass production, as has already happened in advertising, recommendations, and web search.

Artificial Intelligence practitioners, especially in natural language understanding, dialogue, and cognitive modeling, face the same issue: how can we personalize our models for all audiences without relying on unscalable efforts such as writing specific rules, building dialogue trees, or designing knowledge graphs? Catherine Havasi believes we can remove this dichotomy and achieve “mass personalization.” In this session we’ll discuss how to understand domain text and build believable digital characters. We’ll talk about how adding a little common sense, cognitive architectures, and planning is making this all possible.

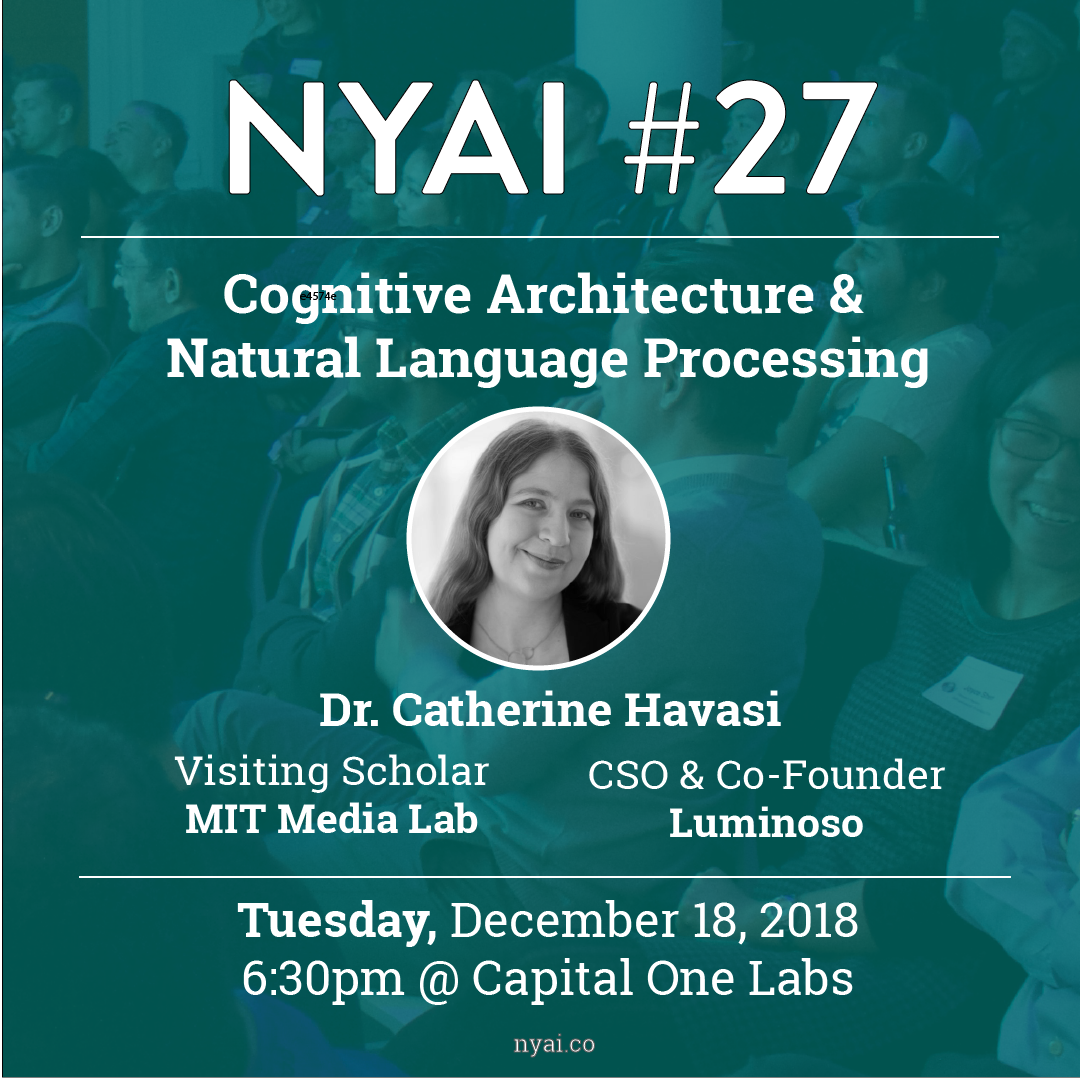

About the Speaker

Dr. Catherine Havasi is a technology strategist, artificial intelligence researcher, and entrepreneur. In the late 90s, she co-founded the Common Sense Computing Initiative, or ConceptNet, the first crowd-sourced project for artificial intelligence and the largest open knowledge graph for language understanding. ConceptNet has played a role in thousands of AI projects and will be turning 20 next year. She has started several companies commercializing AI research, including Luminoso, where she acts as Chief Strategy Officer. She is currently a visiting scientist at the MIT Media Lab, where she works on computational creativity and previously directed the Digital Intuition group.

Resources

- ConceptNet is located here and is available for use: http://conceptnet.io. We (mostly my co-director) run an awesome blog that talks about how to use ConceptNet, NLP/ML, and Ethics in AI: http://blog.conceptnet.io. The Numberbatch embedding is here: https://github.com/commonsense/conceptnet-numberbatch
- Manaal Faruqui’s code for retrofitting: https://github.com/mfaruqui/retrofitting
- Luminoso, the 2010 spin-out that helps companies get actionable insights from customer feedback using transfer learning and common sense, is here: www.luminoso.com
- Here’s the video of the Media Lab panel with Disney Imagineering: https://www.youtube.com/watch?v=V5uV1vR2M6g
- The DARPA Machine Common Sense project: https://www.darpa.mil/program/machine-common-sense
- The Allen Institute’s Mosaic Common Sense project: https://mosaic.allenai.org
- Here’s the 2017 SemEval ConceptNet paper, which started the frequent use of retrofitting in top benchmarking systems: http://www.aclweb.org/anthology/S17-2008
- Here’s ATOMIC, the new social/causal knowledge graph: https://arxiv.org/abs/1811.00146
- Yuanfudao Research’s use of attention and ConceptNet to do good story understanding: http://aclweb.org/anthology/S18-1120
- Here’s the system currently winning the Story Cloze task: https://arxiv.org/abs/1811.00625
- Here’s the NAACL paper on attribute discrimination that I mentioned, which also looked at relation extraction with less task success than wished: http://aclweb.org/anthology/S18-1162. Here’s its poster at NAACL: https://blog.conceptnet.io/2018/06/naacl2018-poster.pdf
- More on the Media Lab’s Narratarium project: https://www.media.mit.edu/projects/narratarium/overview/
